Özer, Demet

Name Variants

O., Demet

ÖZER, DEMET

Demet Ozer

Özer, DEMET

Özer, D.

Özer, Demet

ÖZER, Demet

Demet ÖZER

D. Ozer

Ozer, D.

Ozer,D.

Demet Özer

DEMET ÖZER

O.,Demet

Ozer, Demet

Özer,D.

Ö., Demet

Ozer,Demet

D. Özer

Demet, Ozer

Job Title

Assistant Professor (Dr. Öğr. Üyesi)

Email Address

Main Affiliation

Psychology

Status

Current Staff

Website

ORCID ID

Scopus Author ID

Turkish CoHE Profile ID

Google Scholar ID

WoS Researcher ID

Sustainable Development Goals

SDG data is not available

Documents

17

Citations

183

h-index

6

This researcher does not have a WoS ID.

Scholarly Output

11

Articles

8

Views / Downloads

85/150

Supervised MSc Theses

2

Supervised PhD Theses

0

WoS Citation Count

30

Scopus Citation Count

33

WoS h-index

3

Scopus h-index

4

Patents

0

Projects

0

WoS Citations per Publication

2.73

Scopus Citations per Publication

3.00

Open Access Source

3

Supervised Theses

2

| Journal | Count |
|---|---|
| Quarterly Journal of Experimental Psychology | 3 |
| Dilbilim Arastirmalari Dergisi | 1 |
| Emotion Review | 1 |
| Conference on Human Factors in Computing Systems - Proceedings | 1 |
| Journal of Experimental Psychology-General | 1 |

Scopus Quartile Distribution

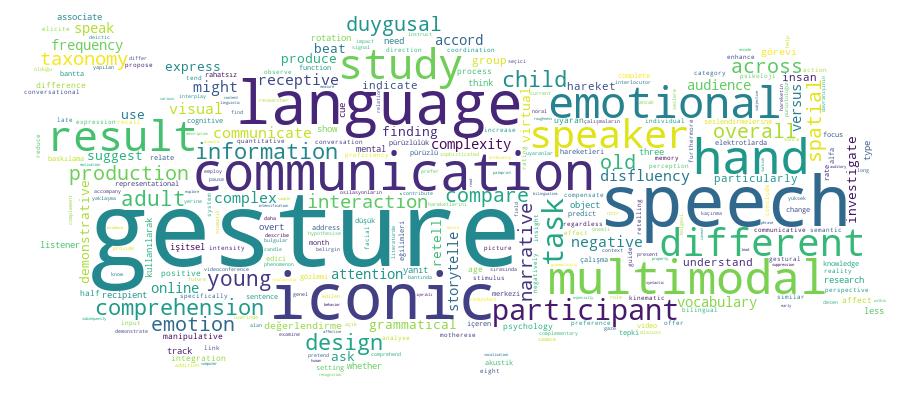

Scholarly Output Search Results

Conference Object · Citation - Scopus: 5

Studying Children's Object Interaction in Virtual Reality: A Manipulative Gesture Taxonomy for VR Hand Tracking (Association for Computing Machinery, 2023)

Baykal, G.E.; Leylekoğlu, A.; Arslan, S.; Özer, D.

In this paper, we propose a taxonomy for classifying children's gestural input elicited from spatial puzzle play in VR hand tracking. The taxonomy builds on the existing manipulative gesture taxonomy in human-computer interaction and offers two main analytical categories, goal-directed actions and hand kinematics, as complementary dimensions for analysing gestural input. Based on our study with eight children (aged 7-14), we report qualitative results describing the categories for analysis and quantitative results on how frequently they occur in children's interaction with the objects during the spatial task. This taxonomy is an initial step towards capturing the complexity of manipulative gestures in relation to mental rotation actions. It helps designers and developers understand and study children's gestures both as an input for object interaction and as an indicator of spatial thinking strategies in VR hand tracking systems. © 2023 Owner/Author.

Master Thesis

Başkalarının Davranışlarını Gözlemlerken Ölçülen Mu Baskılaması ile Çeşitli Uyaran Özellikleri Arasındaki İlişkinin İncelenmesi [Examining the Relationship Between Mu Suppression Measured While Observing Others' Actions and Various Stimulus Properties] (2024)

Badakul, Ayşe Nur; Özer, Demet; Soyman, Efe

Mu suppression is characterized by the desynchronization of neural oscillations at central electrodes during the observation and execution of an action. As an index of the mirror neuron system in the human brain, it is an important focus of research. A notable gap in the literature is that most studies, following earlier work, quantify mu oscillations within the conventional alpha frequency range (8-13 Hz). This study took its cue from the scattered findings of studies that are often overlooked in the literature. It used a data-driven approach to examine the dynamics of oscillatory power while participants observed videos of hand, face, and artificial pattern movements. A total of 30 participants aged 19 to 34 (M = 22, SD = 3; female/male = 24/6) took part. EEG was recorded in a Faraday cage while participants completed a movement rating task. Recording used a Brain Vision actiCHamp system with 32 active electrodes mounted in a cap following the international 10-20 system. For this task, a stimulus set of 156 grayscale videos depicting hand, face, or pattern movements was created. The movement rating task comprised two repetitions of each of the 156 video stimuli, yielding 312 trials distributed pseudo-randomly across four blocks. On one quarter of all trials (78 trials), a rating screen was presented after the video ended, and participants gave their ratings on a 9-point scale. Time-frequency analyses showed significant suppression of neural oscillations at central EEG electrodes during action observation only in the lower mu sub-band (8-10.5 Hz). No suppression was observed in the upper mu sub-band (10.5-13 Hz). This sub-band differentiation was not evident in alpha oscillations recorded from occipital electrodes. In the lower sub-band, suppression was distinct and selectively stronger in two places. It was stronger for hand movements at central electrodes over the hand area of the sensorimotor cortex. It was also stronger for face movements at fronto-temporal electrodes over the face area. In the upper sub-band, selective suppression was observed only for face movements at fronto-temporal electrodes. In addition, lower sub-band oscillations were found to be consistent with the temporal modeling of the biological motion depicted in the videos.

Article · Citation - WoS: 13 · Citation - Scopus: 12

Gesture use in L1-Turkish and L2-English: Evidence from emotional narrative retellings (Sage Publications Ltd, 2023)

Ozder, Levent Emir; Ozer, Demet; Goksun, Tilbe

Bilinguals tend to produce more co-speech hand gestures to compensate for reduced communicative proficiency when speaking in their L2. Here we investigated L1-Turkish and L2-English speakers' gesture use in an emotional context. We specifically asked two questions. First, whether and how speakers gestured differently while retelling L1 versus L2 narratives and positive versus negative narratives. Second, whether gesture production during retellings was associated with speakers' later subjective emotional intensity ratings of those narratives. We asked 22 participants to read and then retell eight emotion-laden narratives (half positive, half negative; half Turkish, half English). We analysed gesture frequency during the entire retelling and during emotional speech only (i.e., gestures that co-occur with emotional phrases such as "happy"). Participants produced more representational gestures in L2 than in L1. However, they used more representational gestures during emotional content in L1 than in L2. Participants also produced more co-emotional speech gestures when retelling negative than positive narratives, regardless of language. In L1, they also produced more beat gestures co-occurring with emotional speech in negative narratives. Furthermore, using more gestures when retelling a narrative was associated with higher emotional intensity ratings for that narrative. Overall, these findings suggest three conclusions. (1) Bilinguals might use representational gestures to compensate for reduced linguistic proficiency in their L2. (2) Speakers use more gestures to express negative emotional information, particularly during emotional speech. (3) Gesture production may enhance the encoding of emotional information, which in turn intensifies emotion perception.

Article · Citation - WoS: 4

Multimodal Language in Child-Directed Versus Adult-Directed Speech (Sage Publications Ltd, 2023)

Kandemir, Songul; Ozer, Demet; Aktan-Erciyes, Asli

Speakers design their multimodal communication according to the needs and knowledge of their interlocutors, a phenomenon known as audience design. We use more sophisticated language (e.g., longer sentences with complex grammatical forms) when communicating with adults than with children. This study investigates how speech and co-speech gestures change in adult-directed speech (ADS) versus child-directed speech (CDS) across three different tasks. Overall, 66 adult participants (mean age = 21.05, 60 female) completed three tasks: story-reading, storytelling, and address description. They were instructed to pretend to communicate with a child (CDS) or an adult (ADS). We hypothesised that participants would use more complex language, more beat gestures, and fewer iconic gestures in ADS than in CDS. In the story-reading and storytelling tasks, participants used more iconic gestures for CDS than for ADS. However, participants used more beat gestures in the storytelling task for ADS than for CDS. In addition, language complexity did not differ across conditions. Our findings show that speakers employ different types of gestures (iconic vs. beat) according to the addressee's needs and across different tasks. Speakers might prefer to use more iconic gestures with children than with adults. Results are discussed in light of audience design theory.

Master Thesis

Akustik Pürüzlülük ve Duygusal Seslendirmelerin Yaklaşma-Kaçınma Motivasyonlarına Olan Etkisinin İncelenmesi [Examining the Effects of Acoustic Roughness and Emotional Vocalizations on Approach-Avoidance Motivations] (2024)

Demir, Pınar; Soyman, Efe; Özer, Demet

Our environment is full of stimuli that require us to automatically select the most appropriate response. Some are pleasant and appetitive, while others are unpleasant and threatening. The literature consistently reports that verbal stimuli and emotional facial expressions automatically activate affect-congruent approach-avoidance motivations. However, research on approach and avoidance tendencies using emotional auditory stimuli is relatively scarce. This thesis investigated approach-avoidance motivations in response to various human vocalizations. These vocalizations either convey emotional information or may evoke negative perceptions in the listener. Study 1 examined approach-avoidance tendencies in response to human emotional vocalizations using an Approach-Avoidance Task and a Gender Evaluation Task. The findings revealed congruency effects in the Approach-Avoidance Task, replicating earlier studies that used emotional auditory stimuli. Study 2 examined approach-avoidance tendencies in response to acoustic roughness, a perceptually aversive, danger-signaling property of auditory signals. It used neutral human vocalizations and versions of them with added roughness. When responses depended on explicit evaluation of acoustic roughness, the results showed neither avoidance of aversive rough vocalizations nor approach toward neutral ones. Instead, a general increase in response speed to rough sounds was observed. Study 3 tested further whether the faster responses to rough sounds stemmed from the explicit evaluations. It used a control task in which participants evaluated the vowels in the sounds rather than their roughness. Changing the task instructions completely eliminated the general response-facilitation effect of roughness. Emotional vocalizations and sounds containing acoustic roughness share similar stimulus properties in terms of emotional valence, arousal, and aversiveness. Nevertheless, the three experiments showed that their implicit behavioral effects differ.

Article · Citation - WoS: 3 · Citation - Scopus: 4

Exploring Emotions Through Co-Speech Gestures: the Caveats and New Directions (Sage Publications Inc, 2024)

Aslan, Zeynep; Ozer, Demet; Goksun, Tilbe

Co-speech hand gestures offer a rich avenue for research on emotion communication because they serve both as prominent expressive bodily cues and as an integral part of language. Despite this relevance, research on gesture-speech integration and interaction has focused less on its emotional function than on its cognitive function. This review aims to shed light on the current state of the field regarding the interplay between co-speech hand gestures and emotions. It focuses specifically on the role of gestures in expressing and understanding both others' and one's own emotions. The article concludes by addressing current limitations in the field and proposing future directions for researchers investigating gesture-emotion interaction. Our goal is to provide researchers with a roadmap for exploring the role of gestures in emotions. Ultimately, we aim to contribute to a more comprehensive understanding of how gestures and emotions intersect.

Article · Citation - Scopus: 3

Multimodal Language in Child-Directed Versus Adult-Directed Speech (SAGE Publications Ltd, 2024)

Kandemir, S.; Özer, D.; Aktan-Erciyes, A.

Speakers design their multimodal communication according to the needs and knowledge of their interlocutors, a phenomenon known as audience design. We use more sophisticated language (e.g., longer sentences with complex grammatical forms) when communicating with adults than with children. This study investigates how speech and co-speech gestures change in adult-directed speech (ADS) versus child-directed speech (CDS) across three different tasks. Overall, 66 adult participants (mean age = 21.05, 60 female) completed three tasks: story-reading, storytelling, and address description. They were instructed to pretend to communicate with a child (CDS) or an adult (ADS). We hypothesised that participants would use more complex language, more beat gestures, and fewer iconic gestures in ADS than in CDS. In the story-reading and storytelling tasks, participants used more iconic gestures for CDS than for ADS. However, participants used more beat gestures in the storytelling task for ADS than for CDS. In addition, language complexity did not differ across conditions. Our findings show that speakers employ different types of gestures (iconic vs. beat) according to the addressee's needs and across different tasks. Speakers might prefer to use more iconic gestures with children than with adults. Results are discussed in light of audience design theory. © Experimental Psychology Society 2023.

Article · Citation - WoS: 8 · Citation - Scopus: 7

Gestures Cued by Demonstratives in Speech Guide Listeners' Visual Attention During Spatial Language Comprehension (Amer Psychological Assoc, 2023)

Ozer, Demet; Karadoller, Dilay Z.; Ozyurek, Asli; Goksun, Tilbe

Gestures help speakers and listeners during communication and thinking, particularly for visual-spatial information. When communicating spatial information, speakers tend to use gestures to complement accompanying spoken deictic constructions, such as demonstratives. For example, a speaker might say "The candle is here" while gesturing to the right to express that the candle is on the speaker's right. Visual information conveyed by gestures enhances listeners' comprehension. Whether and how listeners allocate overt visual attention to gestures in different speech contexts is mostly unknown. We asked two questions. (a) Do listeners gaze at gestures more when the gestures complement demonstratives in speech ("here") than when they express information redundant with speech (e.g., "right")? (b) Is gazing at gestures related to listeners' information uptake from those gestures? We demonstrated that listeners fixated gestures more when they expressed complementary rather than redundant information in the accompanying speech. Moreover, overt visual attention to gestures did not predict listeners' comprehension. External cues such as demonstratives signal the heightened communicative value of gestures. These results suggest that this heightened value guides listeners' visual attention to gestures. However, overt visual attention does not seem to be necessary to extract the cued information from the multimodal message.

Article · Citation - Scopus: 1

Grammatical Complexity and Gesture Production of Younger and Older Adults (Dilbilim Dernegi, 2023)

Arslan, B.; Özer, D.; Göksun, T.

Age-related effects are observed in both speech and gesture production. Older adults produce fewer grammatically complex sentences and use fewer iconic gestures than younger adults. This study investigated whether gesture use, especially iconic gesture production, was associated with syntactic complexity within and across younger and older age groups. We elicited language samples from these groups (N = 60) using a picture description task. Results suggested shorter and less complex speech for older than for younger adults. Although the two age groups were similar in overall gesture frequency, older adults produced fewer iconic gestures. Overall gesture frequency, along with participants' age, negatively predicted grammatical complexity. However, iconic gesture frequency was not a significant predictor of complex syntax. We conclude that each gesture might serve a function in a coordinated multimodal system, which might, in turn, influence speech quality. Focusing on individual differences, rather than age groups, might unravel the nature of multimodal communication. © 2023 Dilbilim Derneği, Ankara.

Article · Citation - WoS: 1 · Citation - Scopus: 1

The Link Between Early Iconic Gesture Comprehension and Receptive Language (Wiley, 2024)

Dogan, Isil; Ozer, Demet; Aktan-Erciyes, Asli; Furman, Reyhan; Demir-Lira, O. Ece; Ozcaliskan, Seyda; Goksun, Tilbe

Children comprehend iconic gestures relatively later than deictic gestures. Previous research with English-learning children indicated that they could comprehend iconic gestures at 26 months, a pattern whose extension to other languages is not yet known. The present study examined Turkish-learning children's iconic gesture comprehension and its relation to their receptive vocabulary knowledge. Turkish-learning children aged 22 to 30 months (N = 92, M = 25.6 months, SD = 1.6; 51 girls) completed a gesture comprehension task. In this task, they were asked to choose the picture that matched the experimenter's speech and iconic gestures. They were also administered a standardized receptive vocabulary test. Children's performance in the gesture comprehension task increased with age and was also related to their receptive vocabulary knowledge. Children were then categorized into younger and older age groups based on the median age (i.e., 26 months, the age at which iconic gesture comprehension was present for English-learning children). In that split, only the older group performed at chance level in the task. At the same time, receptive vocabulary was positively related to gesture comprehension for younger but not older children. These findings suggest a shift in iconic gesture comprehension at around 26 months. They also indicate a possible link between receptive vocabulary knowledge and iconic gesture comprehension, particularly for children younger than 26 months.